The age of generative AI and the large language model

It looks like generative AI will be broadly deployed across industries, in education, science, retail and recreation. Everywhere.

Current discussion ranges from enthusiastic technology boosterism about complete transformation to apocalyptic scenarios about AI takeover, autonomous military agents, and worse.

Comparisons with earlier technologies seem apt, but also vary in suggested importance. (Geoffrey Hinton wryly observed in an interview that it may be as big as the invention of the wheel.)

Like those earlier technologies - the web or mobile, for example - progress is not linear or predictable. It will be enacted in practices which will evolve and influence further development, and will take unimagined directions as a result. It has the potential to engage deeply with human behaviours to create compounding effects in a progressively networked environment. Think of how mobile technologies and interpersonal communications reshaped each other, and how the app store or the iPod/iPhone evolved in response to use.

It is likely to become a routine part of office, search, social and other applications. And while some of this will appear magic, much of it will be very mundane.

The outcomes will be productive, and also problematic. There is the dilution of social trust and confidence as synthesized communication or creation is indiscernible from human forms; there is the social impact on employment, work quality and exploited labor; there is potential for further concentration of economic or cultural power; there is propagation of harmful and historically dominant perspectives.

There is of course now a large technical and research literature, as well as an avalanche of popular exposition and commentary in text, podcasts, and on YouTube. The click-bait is strong, and it can be difficult to separate the spectacular but short-lived demonstration from the real trend.

Accordingly, I include quite a few links to further sources of information and I also quote liberally. Much of what I discuss or link to will be superseded quickly.

Do get in touch if you feel I make an error of judgement or emphasis.

- Brief orientation

- Three strands in current evolution and debate

- 1. Major attention and investment

- 2. Rapid continuing evolution

- 3. Social concerns

- Conclusion

Brief orientation

Large language model

ChatGPT rests on a large language model. A large language model (LLM) is a neural network which is typically trained on large amounts of Internet and other data. It generates responses to inputs ('prompts'), based on inferences over the statistical patterns it has learned through training.
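To make "inferences over statistical patterns" a little more concrete, here is a deliberately tiny sketch. It is not how an LLM is built (there is no neural network here, only word-pair counts), and the corpus, function names and parameters are all invented for illustration. It shows the same basic move at toy scale: learn which words tend to follow which, then extend a prompt by sampling from those patterns.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, prompt, length=8, seed=0):
    """Extend the prompt by sampling each next word in proportion to its count."""
    rng = random.Random(seed)
    output = prompt.lower().split()
    for _ in range(length):
        candidates = follows.get(output[-1])
        if not candidates:  # no pattern learned for this word, so stop
            break
        words, counts = zip(*candidates.items())
        output.append(rng.choices(words, weights=counts, k=1)[0])
    return " ".join(output)

# Hypothetical miniature "training data".
corpus = "the cat sat on the mat . the dog sat on the rug ."
model = train_bigram(corpus)
print(generate(model, "the cat"))
```

The output will read as plausible-sounding word sequences drawn from the corpus, with no notion of whether they are true. A real LLM replaces the count table with billions of learned parameters and conditions on far more context than a single preceding word.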

This ability is used in applications such as personalization, entity extraction, classification, machine translation, text summarization, and sentiment analysis. It can also generate new outputs, such as poetry, code and, alas, content marketing. Being able to iteratively process and produce text also allows it to follow instructions or rules, to pass instructions between processes, to interact with external tools and knowledge bases, to generate prompts for agents, and so on.

This ability means that we can use LLMs to manage multiple interactions through text interfaces. This potentially gives them compounding powers, as multiple tools, data sources or knowledge bases can work together iteratively ... and is also a cause of concern about control and unanticipated behaviors where they go beyond rules or act autonomously.

LLMs accumulate knowledge about the world. But their responses are based on inferences about language patterns rather than about what is 'known' to be true, or what is arithmetically correct. Of course, given the scale of the data they have been trained on, and the nature of the tuning they receive, they may appear to know a lot, but then will occasionally fabricate plausible but fictitious responses, may reflect historically dominant perspectives about religion, gender or race, or will not recognise relevant experiences or information that has been suppressed or marginalized in the record.

I liked this succinct account by Daniel Hook of Digital Science:

What can seem like magic is actually an application of statistics – at their hearts Large Language Models (LLMs) have two central qualities: i) the ability to take a question and work out what patterns need to be matched to answer the question from a vast sea of data; ii) the ability to take a vast sea of data and “reverse” the pattern-matching process to become a pattern creation process. Both of these qualities are statistical in nature, which means that there is a certain chance the engine will not understand your question in the right way, and there is another separate probability that the response it returns is fictitious (an effect commonly referred to as “hallucination”). // Daniel Hook

And here is a more succinct statement by Stephen Wolfram from his extended discussion of ChatGPT: '... it’s just saying things that “sound right” based on what things “sounded like” in its training material.'

I used 'text' above rather than 'language.' Language is integral to how we think of ourselves as human. When we talk to somebody, our memories, our experiences of being in the world, our reciprocal expectations are in play. Removing the human from language has major cultural and personal ramifications we have not yet experienced. I was struck by this sardonically dystopian discussion of AI a while ago in a post on the website of the writing app iA. It concludes with brief poignant comments about language as a human bridge and about what is lost when one side of the bridge is disconnected.

Generative Pre-trained Transformer

ChatGPT galvanized public attention to technologies which had been known within labs and companies for several years. GPT refers to a family of language models developed by OpenAI. Other language models have similar architectures. GPT-4, the model underlying ChatGPT, is an example of what has come to be called a foundation model.

In recent years, a new successful paradigm for building AI systems has emerged: Train one model on a huge amount of data and adapt it to many applications. We call such a model a foundation model. // Center for Research on Foundation Models, Stanford

It is useful to break down the letters in GPT for context.

- The 'g' is generative as in 'generative AI.' It underlines that the model generates answers, or new code, images or text. It does this by inference over the patterns it has built in training given a particular input or 'prompt.'

- The 'p' stands for 'pre-trained', indicating a first training phase in which the model processes large amounts of data without any explicit tuning or adjustment. It learns about patterns and structure in the data, which it represents as weights. In subsequent phases, the model may be tuned or specialised in various ways.

- The 't' in GPT stands for 'transformer.' Developed by Google in 2017, the transformer model is the neural network architecture which forms the basis of most current large language models (Attention is all you need, the paper introducing the model). The transformer defines the learning model, and generates the parameters which characterise it. It is common to describe an LLM in terms of the number of parameters it has: the more parameters, the more complex and capable it is. Parameters represent variable components that can be adjusted as the model learns, during pre-training or tuning. Weights are one kind of parameter; embeddings are another important kind. Embeddings are a numerical representation of tokens (words, phrases, ...) which can be used to estimate mutual semantic proximity ('river' and 'bank' are close in one sense of 'bank', and more distant in another).
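The 'river' and 'bank' example can be sketched numerically. The vectors below are hand-picked, hypothetical three-dimensional embeddings (real models learn vectors with hundreds or thousands of dimensions), and giving each sense of 'bank' its own vector is a simplification made for illustration. Cosine similarity is one common way to estimate proximity between two embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, invented for this example only.
embeddings = {
    "river":          [0.9, 0.1, 0.0],
    "bank (shore)":   [0.8, 0.2, 0.1],
    "bank (finance)": [0.1, 0.9, 0.2],
}

river = embeddings["river"]
for token, vector in embeddings.items():
    print(f"{token:15s} {cosine_similarity(river, vector):.3f}")
```

With these made-up numbers, 'bank (shore)' scores much closer to 'river' than 'bank (finance)' does, which is the kind of relationship a trained model is expected to capture from usage patterns.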

Three further topics might be noted here.

First, emergent abilities. It has been claimed that as models have scaled, unforeseen capabilities have emerged [pdf]. These include the ability to be interactively coached by prompts ('in context learning'), to do some arithmetic, and others. However, more recently these claims have been challenged [pdf], suggesting that emergent abilities are creations of the analysis rather than actual model attributes. This question is quite important, because emergent abilities have become a central part of the generative AI narrative, whether one is looking forward to increasing capabilities or warning against unpredictable outcomes. For example, the now famous letter calling for a pause in AI development warns against 'the dangerous race to ever-larger unpredictable black-box models with emergent capabilities.' BIG-bench is a cross-industry initiative looking at characterizing model behaviors as they scale and 'improve' [pdf]. I was amused to see this observation: 'Also surprising was the ability of language models to identify a movie from a string of emojis representing the plot.'
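One way an apparent "emergent" jump can be a creation of the analysis is through the choice of metric. The sketch below is a simplified illustration of that general idea, not a reproduction of either paper: the accuracy figures are invented, and it assumes each token of an answer is right or wrong independently. If per-token accuracy improves smoothly with scale, an all-or-nothing exact-match score over a ten-token answer can still look like a sudden leap.

```python
def exact_match_rate(per_token_accuracy, answer_length):
    """Chance that every token of an answer is right, assuming independence."""
    return per_token_accuracy ** answer_length

# Hypothetical per-token accuracies for successively larger models.
accuracies = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95]
ANSWER_LENGTH = 10  # tokens that must all be correct for an exact match

for p in accuracies:
    rate = exact_match_rate(p, ANSWER_LENGTH)
    print(f"per-token {p:.2f} -> exact-match {rate:.3f}")
```

The per-token column rises steadily, while the exact-match column stays near zero for the smaller "models" and then climbs steeply, even though nothing discontinuous has happened underneath.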

Second, multimodal models. Generative models are well established, if developing rapidly. Historically models may have worked on text, or image or some other mode. There is growing interest in having models which can work across modes. GPT-4 and the latest Google models have some multi-modal capability - they can work with images and text, as can, say, Midjourney or DALL-E. This is important for practical reasons (doing image captioning or text to image, for example). It also diversifies the inputs into the model. In an interesting development, Meta has released an experimental multi-modal open source model, ImageBind, which can work across six types of data in a single embedding space: visual (in the form of both image and video); thermal (infrared images); text; audio; depth information; and movement readings generated by an inertial measuring unit, or IMU.

And third, embeddings and vector databases. Embeddings are important in the context of potential discovery applications, supporting services such as personalization, clustering, and so on. OpenAI, Hugging Face, Cohere and others offer embedding APIs to generate embeddings for external resources which can then be stored and used to generate some of those services. This has given some lift to vector databases, which seem likely to become progressively more widely used to manage embeddings. There are commercial and open source options (Weaviate, Pinecone, Chroma, etc.). Cohere recently made embeddings for multiple language versions of Wikipedia available on Hugging Face. These can be used to support search and other applications, and similarity measures work across languages.
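As a rough picture of what a vector database does with embeddings, here is a minimal in-memory sketch. The class, document ids and vectors are all hypothetical; in practice the vectors would come from an embedding API and a real product would add persistence, indexing and approximate search at scale. It is not the interface of Weaviate, Pinecone, Chroma or any other product.

```python
import math

class TinyVectorStore:
    """In-memory stand-in for a vector database (illustrative only)."""

    def __init__(self):
        self._items = []  # list of (doc_id, unit-length vector)

    @staticmethod
    def _normalize(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        return [x / norm for x in vector]

    def add(self, doc_id, vector):
        """Store a document id alongside its normalized embedding."""
        self._items.append((doc_id, self._normalize(vector)))

    def search(self, query, k=2):
        """Return the k stored ids most similar to the query vector."""
        q = self._normalize(query)
        scored = [
            (sum(a * b for a, b in zip(q, vec)), doc_id)
            for doc_id, vec in self._items
        ]
        scored.sort(reverse=True)  # highest similarity first
        return [(doc_id, round(score, 3)) for score, doc_id in scored[:k]]

store = TinyVectorStore()
# Made-up embeddings standing in for the output of an embedding model.
store.add("article-on-rivers", [0.9, 0.1, 0.0])
store.add("article-on-banking", [0.1, 0.9, 0.2])
store.add("article-on-lakes", [0.8, 0.1, 0.3])

results = store.search([1.0, 0.0, 0.1], k=2)
print(results)
```

Because the comparison is between vectors rather than strings, the same search works whatever language the original text was in, provided the embeddings share one space.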

LLMs do not have experiential knowledge of the world or 'common sense.' This touches on major research questions in several disciplines. Yejin Choi summarizes in her title: Why AI Is Incredibly Smart — and Shockingly Stupid.

Three strands in current evolution and debate

I focus on three areas here: major attention and investment across all domains, rapid continuing evolution of technologies and applications, and social concerns about harmful or undesirable features.

1 Major attention and investment

Major attention and investment is flowing into this space, across existing and new organizations. Just to give a sense of the range of applications, here are some examples which illustrate broader trends.

- Coaching and learning assistants. Khan Academy has developed Khanmigo, a ChatGPT-based interactive tutor which can be embedded in the learning experience. They are careful to highlight the 'guardrails' they have built into the application, and are very optimistic about its positive impact on learning. It is anticipated that this kind of tutoring co-pilot may be deployed in many contexts. For example, Chegg has announced that it is working with GPT-4 to deliver CheggMate, "an AI conversational learning companion." This may have been a defensive move; it was also recently reported that Chegg's shares had dropped 40% given concerns about the impact of ChatGPT on its business. Pearson has issued a cease and desist notice to an (unnamed) AI company that has been using its content, and has said it will train its own models.

- Consumer navigation/assistance. Expedia has a two-way integration with ChatGPT - there is an Expedia plugin to allow ChatGPT to access Expedia details, and Expedia uses ChatGPT to provide enhanced guidance on its own site. Other consumer sites have worked with ChatGPT to provide plugins. Form filling, intelligent assistants, enquiry services, information pages, and so on, will likely see progressive AI upgrades. Wendy's has just announced a collaboration with Google to develop a chatbot to take orders at the drive-thru.

- Competitive workflows/intelligence. Organizations are reviewing operations and workflows, looking for efficiencies, new capacities, and competitive advantage. A couple of announcements from PWC provide an example. It reports it is investing over $1B in scaling its ability to support and advise clients in developing AI-supported approaches. It also recently announced an agreement with Harvey, a startup specialising in AI services to legal firms, to provide PWC staff with "human led and technology enabled legal solutions in a range of areas, including contract analysis, regulatory compliance, claims management, due diligence and broader legal advisory and legal consulting services." It will build proprietary language models with Harvey to support its business.

- Content generation/marketing. LinkedIn now uses ChatGPT to "include generative AI-powered collaborative articles, job descriptions and personalized writing suggestions for LinkedIn profiles." The collaborative articles I have seen have been somewhat bland, which underlines a concern many have that we will be flooded by robotic text - in adverts, press releases, content marketing, articles - as it becomes trivially easy to generate text. Canadian company Cohere offers a range of content marketing, search, recommendation, summarization services based on its language models built to support business applications.

- Code. GitHub Copilot, which writes code based on prompts, is accelerating code development. You can also ask ChatGPT itself to generate code for you, to find mistakes or suggest improvements. Amazon has recently released CodeWhisperer, a competitor, and made it free to use for individual developers. There has also been concern here about unauthorised or illegal reuse of existing code in training sets. Partly in response, BigCode is an initiative by Hugging Face and others to develop models based on code which is permissively licensed and to remove identifying features. They have released some models, and describe the approach here.

- Productivity. Microsoft is promising integration across its full range of products to improve productivity (please don't mention Clippy). Other apps will increasingly include (and certainly advertise) AI features - Notion, Grammarly, and so on.

- Publishing. The publishing workflow will be significantly modified by AI support. A group recently published an interesting taxonomy of areas where AI would have an impact on scholarly publishing: Extract, Validate, Generate, Analyse, Reformat, Discover, Translate. A major question is the role of AI in the generation of submissions to publishers and the issues it poses in terms of creation and authenticity. It seems likely that we will see synthetic creations across the cultural genres - art, music, literature - which pose major cultural and legal questions.

- Image generation. Adobe is trialling Firefly, an 'AI art generator, as competition to Midjourney and others.' Adobe is training the application on its own reservoir of images, to which it has rights.

- Google. For once, Google is playing catchup and has released Bard, its chat application. It is interesting to note how carefully, tentatively almost, it describes its potential and potential pitfalls. And it has been cautious elsewhere. It is not releasing a public demo of Imagen, its text to image service, given concerns about harmful or inappropriate materials (see further below). However, Google's work in this area has been the subject of ongoing public debate. Several years ago, Timnit Gebru, co-lead of its internal Ethical Artificial Intelligence Team, left Google after disagreement over a paper critiquing AI and language models (since published: On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?). More recently, Geoffrey Hinton, neural network pioneer, resigned from his position at Google so that he could more readily express his concerns about general AI directions, especially given increased commercial competition. And, from a different perspective, an internal memo was recently leaked, documenting what it claimed to be technical and business competitive missteps by Google. Google has since announced a broad range of AI augmentations across its product suite, including search.

- Developers, apps. As important as these more visible initiatives is the explosion of general interest as individual developers and organizations experiment with language models, tools and applications. Check out the number of openly available language models on Hugging Face or the number of extensions/plugins appearing for applications (see the Visual Studio Code Marketplace for example or WordPress plugins).

A discussion of applications in research and education, which of course are of critical importance to libraries, is too big a task to attempt here and is outside my scope. There is already a large literature and a diverse body of commentary and opinion. They are especially interesting given their knowledge intensive nature and the critical role of social trust and confidence. The Jisc Primer (see below) introduces generative AI before discussing areas relevant to education. It notes the early concern about assessment and academic integrity, and then covers some examples of use in teaching and learning, of use as a time-saving tool, and of use by students. AI is already broadly used in research disciplines, in many different ways and at different points in the life cycle, and this will continue to grow. This is especially the case in medical, engineering and STEM disciplines, but will apply across all disciplines. See for example this discussion of the potential use of AI in computational social sciences [pdf]. A major issue is the transparency of the training and tuning models. Results need to be understandable and reproducible. A telling example of how books were represented in training data was provided in a paper with the arresting title: Speak, Memory: An Archaeology of Books Known to ChatGPT/GPT-4 [pdf]. They found many books in copyright, and also found that some categories were disproportionately over-represented: science fiction and fantasy and popular out of copyright works, for example. For cultural analytics research, they argue that this supports a case for open models with known training data.

The UK national agency for education support, Jisc, has a primer on Generative AI which has a special focus on applications in education. It plans to keep it up to date.

2 Rapid continuing evolution

The inner workings of these large models are not well understood and they have shown unpredictable results. OpenAI chooses a strange and telling analogy here as they describe the unsupervised LLM training - building the model is 'more similar to training a dog than to ordinary programming.'

Unlike ordinary software, our models are massive neural networks. Their behaviors are learned from a broad range of data, not programmed explicitly. Though not a perfect analogy, the process is more similar to training a dog than to ordinary programming. An initial “pre-training” phase comes first, in which the model learns to predict the next word in a sentence, informed by its exposure to lots of Internet text (and to a vast array of perspectives). This is followed by a second phase in which we “fine-tune” our models to narrow down system behavior. // OpenAI

Here are some areas of attention and interest:

A diversity of models

OpenAI, Google and Microsoft and others are competing strongly to provide foundation models, given the potential rewards. Amazon has built out its offerings a little more slowly, and is partnering with various LLM developers. Startups such as AI21 Labs and Anthropic are also active. These will be important elements of what is a diversifying environment, and LLMs will be components in a range of platform services offered by these commercial players.

There is also a very active interest in smaller models, which may be run in local environments, or are outside of the control of the larger commercial players. Many of these are open source. There has been a lot of work based on LLaMA, a set of models provided by Meta (with names like Alpaca, Vicuna, and .... Koala). Current licensing restricts these to research uses, so there has also been growing activity around commercially usable models. Databricks recently released Dolly 2.0, an open source model which is available for commercial use. They also released the training data for use by others.

Mosaic ML, a company supporting organizations in training their models, has also developed and released several open source models which can be used commercially. It has also released what it calls an LLM Foundry, a library for training and fine-tuning models. There is a base model and some tuned models. Especially interesting is MPT-7B-StoryWriter-65k+, a model optimised for reading and writing stories.

Many others are also active here and many models are appearing. This was highlighted in the infamous leaked Google Memo. It underlined the challenge of smaller open source LLMs to Google, stressing that it has no competitive moat, and that, indeed, the scale of its activity may be slowing it down.

While our models still hold a slight edge in terms of quality, the gap is closing astonishingly quickly. Open-source models are faster, more customizable, more private, and pound-for-pound more capable. They are doing things with $100 and 13B params that we struggle with at $10M and 540B. And they are doing so in weeks, not months. // Google "We Have No Moat, And Neither Does OpenAI"

At the same time, many specialist models are also appearing. These may be commercial and proprietary, as in the PWC/Harvey and Bloomberg examples I mention elsewhere. Or they may be open or research-oriented, such as those provided by the National Library of Sweden, or proposed by Core (an open access aggregator) and the Allen Institute for AI. Intel and the Argonne National Laboratory have announced a series of LLMs to support the scientific research community, although not much detail is provided (at the time of writing). More general models may also be specialised through use of domain specific instruction sets at tuning stage. Google has announced specialised models for cybersecurity and medical applications, for example, based on its latest PaLM 2 model. Although it has not published many technical details, LexisNexis has released a product, Lexis+ AI, which leverages several language models, including, it seems, some trained on Lexis materials.

So, in this emergent phase there is much activity around a diverse set of models, tools and additional data. A race to capture commercial advantage sits alongside open, research and community initiatives. Hugging Face has emerged as an important aggregator for open models, as well as for data sets and other components. Models may be adapted or tuned for other purposes. This rapid innovation, development, and interaction between models and developers sits alongside growing debate about transparency (of training data among other topics) and responsible uses.

A (currently) updated list of open source LLMs.

Refinement or specialization: Fine-tuning, moderation, and alignment

Following the 'unsupervised' generation of the large language model, it is 'tuned' in later phases. Tuning alters parameters of the model to optimise the performance of LLMs for some purpose, to mitigate harmful behaviors, to specialise to particular application areas, and so on. Methods include using a specialist data set targeted to a particular domain, provision of instruction sets with question/response examples, human feedback, and others. Retraining large models is expensive, and finding ways to improve their performance or to improve the performance of smaller models is important. Similarly, it may be beneficial to tune an existing model to a particular application domain. Developing economic and efficient tuning approaches is also an intense R&D focus, especially as more models targeted at lower power machines appear.

OpenAI highlights the use of human feedback to optimize the model.

The data is a web-scale corpus of data including correct and incorrect solutions to math problems, weak and strong reasoning, self-contradictory and consistent statements, and representing a great variety of ideologies and ideas.

So when prompted with a question, the base model can respond in a wide variety of ways that might be far from a user’s intent. To align it with the user’s intent within guardrails, we fine-tune the model’s behavior using reinforcement learning with human feedback (RLHF). // OpenAI

OpenAI also published safety standards and has a Moderation language model which it has also externalised to API users.

The Moderation models are designed to check whether content complies with OpenAI's usage policies. The models provide classification capabilities that look for content in the following categories: hate, hate/threatening, self-harm, sexual, sexual/minors, violence, and violence/graphic. You can find out more in our moderation guide. // OpenAI
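To show how an application might act on this kind of classification, here is a small sketch. The category names are those listed in the quote above. Everything else is an assumption made for illustration: the scores are invented, the threshold is arbitrary, and the function is hypothetical rather than part of any OpenAI library.

```python
# Category names as listed in the OpenAI description quoted above.
CATEGORIES = [
    "hate", "hate/threatening", "self-harm", "sexual",
    "sexual/minors", "violence", "violence/graphic",
]

def flag_content(scores, threshold=0.5):
    """Flag any category whose (hypothetical) score meets the threshold."""
    flagged = [c for c in CATEGORIES if scores.get(c, 0.0) >= threshold]
    return {"flagged": bool(flagged), "categories": flagged}

# Invented scores, standing in for the output of a moderation classifier.
example_scores = {"violence": 0.91, "hate": 0.12, "self-harm": 0.03}
print(flag_content(example_scores))
```

An application would typically run a check of this kind on user input, on model output, or on both, and then block, rewrite or escalate anything that is flagged.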

OpenAI externalised the RLHF work to contractors, involving labor practices that are attracting more attention as discussed further below. Databricks took a 'gamification' approach among its employees to generate a dataset for tuning. Open Assistant works with its users in a crowdsourcing way to develop data for tuning [pdf]. GPT4All, a chat model from Nomic AI, asks its users whether it can capture interactions for further training.

Tuning data is also becoming a sharable resource, and subject to questions about transparency and process. For example, Stability AI reports how it has tuned an open source model, StableVicuna, using RLHF data from several sources, including Open Assistant.

Reinforcement Learning by Human Feedback is just parenting for a supernaturally precocious child.

— Geoffrey Hinton (@geoffreyhinton) March 15, 2023

Tweet by Geoffrey Hinton - major figure in neural network development.

The question of 'alignment', mentioned in the OpenAI quote, is key, although use of the term is elastic depending on one's view of threats. Tuning aims to align the outputs of the model with human expectations or values. This is a very practical issue in terms of effective deployment and use of LLMs in production applications. Of course it also raises important policy and ethical issues. Which values, one might ask? Does one seek to remove potentially harmful data from the training materials, or try to tune the language models to recognise it and respond appropriately? How does one train models to understand that there are different points of view or values, and respond appropriately? And in an age of culture wars, fake news, ideological divergences, and very real wars, 'alignment' takes on sinister overtones.

This work sits alongside the very real concerns that we do not know enough about how the models work to anticipate or prevent potential harmful effects as they get more capable. Research organization EleutherAI initially focused on providing open LLMs but has pivoted to researching AI interpretability and alignment as more models are available. There are several organizations devoted to alignment research (Redwood Research, Conjecture) and LLM provider Anthropic has put a special emphasis on safety and alignment. And several organizations more broadly research and advocate in favor of positive directions. DAIR is a research institute founded by Timnit Gebru "rooted in the belief that AI is not inevitable, its harms are preventable, and when its production and deployment include diverse perspectives and deliberate processes it can be beneficial."

The return of content

Alex Zhavoronkov had an interesting piece in Forbes where he argues that content generators/owners are the unexpected winners as LLMs become more widely used. The value of dense, verified information resources increases as they provide training and validation resources for LLMs in contrast to the vast uncurated heterogeneous training data. Maybe naturally, he points to Forbes as a deep reservoir of business knowledge. He also highlights the potential of scientific publishers, Nature and others, whose content is currently mostly paywalled and isolated from training sets. He highlights not only their unique content, but also the domain expertise they can potentially marshal through their staffs and contributors.

Nature of course is part of the Holtzbrinck group which is also home to Digital Science. Zhavoronkov mentions Elsevier and Digital Science and notes recent investments which position them well now.

A good example from a different domain is provided by Bloomberg, which has released details of what it claims is the largest domain-specific model, BloombergGPT. This is created from a combination of Bloomberg's deep historical reservoir of financial data as well as from more general publicly available resources. It claims that this outperforms other models considerably on financial tasks, while performing as well or better on more general tasks.

“The quality of machine learning and NLP models comes down to the data you put into them,” explained Gideon Mann, Head of Bloomberg’s ML Product and Research team. “Thanks to the collection of financial documents Bloomberg has curated over four decades, we were able to carefully create a large and clean, domain-specific dataset to train a LLM that is best suited for financial use cases. We’re excited to use BloombergGPT to improve existing NLP workflows, while also imagining new ways to put this model to work to delight our customers.” // Bloomberg

The model will be integrated into Bloomberg services, and Forbes speculates that it will not be publicly released given the competitive edge it gives Bloomberg. Forbes also speculates about potential applications: creating an initial draft of a SEC filing, summarizing content, providing organization diagrams for companies, and noting linkages between people and companies; automatically generated market reports and summaries for clients; custom financial reports.

Bloomberg claims that it achieved this with a relatively small team. This prompts the question about what other organizations might do. I have mentioned some in the legal field elsewhere. Medical and pharmaceutical industries are obvious areas where there is already a lot of work. Would IEEE or ACS/CAS develop domain specific models in engineering and chemistry, respectively?

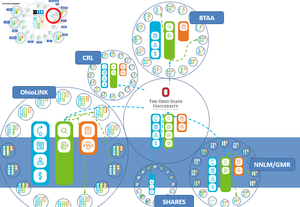

Libraries, cultural and research organizations have potentially much to contribute here. It will be interesting to see what large publishers do, particularly what I call the scholarly communication service providers mentioned above (Elsevier, Holtzbrinck/Digital Science, Clarivate). These have a combination of deep content, workflow systems, and analytics services across the research workflow. They have already built out research graphs of researchers, institutions, and research outputs. The National Library of Sweden has been a pioneer also, building models on Swedish language materials, and cooperating with other national libraries. I will look at some initiatives in this space in a future post.

Specialist, domain or national data sets are also interesting in the context of resistance to the 'black box' nature of the current foundation models, unease about some of the content of uncurated web scrapes, and concern about the WEIRD (Western, Educated, Industrial, Rich, Democratic) attributes of current models. We will likely see more LLMs specialised by subject, by country or language group, or in other ways, alongside and overlapping with the push to open models.

Platform competition

There is a major commercial focus on providing services which in different ways allow development, deployment and orchestration of language models. It is likely that on-demand, cloud-based LLM platforms which offer access to foundation models, as well as to a range of development, customization, deployment and other tools will be important. The goal will be to streamline operations around the creation and deployment of models in the same way as has happened with other pieces of critical infrastructure.

OpenAI has pushed strongly to become a platform which can be widely leveraged. It has developed API and plugin frameworks, and has adjusted its API pricing to be more attractive. It has a clear goal to make ChatGPT and related offerings a central platform provider in this emerging industry.

Microsoft is a major investor in OpenAI and has moved quickly to integrate AI into products, to release Bing Chat, and to look at cloud-based infrastructure services. Nvidia is also a big player here. Predictably, Amazon has been active. It recently launched Bedrock ("privately customize FMs with your own data, and easily integrate and deploy them into your applications") and Titan (several foundation models), to work alongside SageMaker, a set of services for building and managing LLM infrastructure and workflows. Fixie is a new platform company which aims to provide enterprise customers with LLM-powered workflows integrating agents, tools and data.

Again, the commercial stakes are very high here, so these and other companies are moving rapidly.

The position of Hugging Face is very interesting. It has emerged as a central player in terms of providing a platform for open models, transformers and other components. At the same time it has innovated around the use of models, itself and with partners, and has supported important work on awareness, policy and governance.

It will also be interesting to see how strong the non-commercial presence is. There are many research-oriented specialist models. Will a community of research or public interest form around particular providers or infrastructure?

Today we're thrilled to announce our new undertaking to collaboratively build the best open language model in the world: AI2 OLMo. Uniquely open, 70B parameters, coming early 2024 – join us! https://t.co/9lQ2KYVC0v // Allen Institute for AI (@allen_ai), May 11, 2023

A modest aspiration: the best open language model in the world [Tweet]

Guiding the model: prompt engineering and in-context learning

'Prompt engineering' has also quickly entered the general vocabulary. It has been found that results can be interactively improved by 'guiding' the LLM in various ways, for instance by providing examples of expected answers. Much of this will also be automated as agents interact with models, multiple prompts are programmatically passed to the LLM, prompts are embedded in templates not visible to the user, and so on. A prompt might include particular instructions (asking the LLM to adopt a particular role, for example, or requesting a particular course of action if it does not know the answer), context (demonstration examples of answers, for example), directions for the format of the response, as well as an actual question.
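To make the parts of a prompt concrete, here is a minimal sketch of how a template might assemble them. The function name `build_prompt`, the section labels and the example text are all illustrative assumptions, not the format of any particular service.

```python
def build_prompt(question, instructions="", context="", response_format=""):
    """Join the non-empty parts of a prompt in a fixed order.

    The labels used here are an assumption for illustration; real
    templates vary widely and are often hidden from the end user.
    """
    parts = [
        ("Instructions", instructions),
        ("Context", context),
        ("Response format", response_format),
        ("Question", question),
    ]
    # Skip any part that was left empty so the prompt stays compact.
    return "\n\n".join(
        f"{label}:\n{text.strip()}" for label, text in parts if text.strip()
    )


prompt = build_prompt(
    question="Which library services depend on interlibrary lending?",
    instructions="You are a reference librarian. If you do not know the answer, say so.",
    response_format="A bulleted list of at most five items.",
)
print(prompt)
```

In an automated setting, a template like this would be filled in by the application and the resulting string passed to the model.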

Related to this, a major unanticipated 'emergent ability' is the improvement in responses that can be achieved by 'in context learning': 'In context learning is a paradigm that allows language models to learn tasks given only a few examples in the form of demonstration.' It is 'in context' because it is not based on changing the underlying model, but on improving results in a particular interaction. The language model can be influenced by examples in inferring outputs. So, if you are asking it to develop a slogan for your organization, for example, you could include examples of good slogans from other organizations in the prompt.
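The slogan example can be sketched as a 'few-shot' prompt, in which demonstrations precede the new request and the model is left to complete the final line. The organizations and slogans below are invented for illustration, and `few_shot_prompt` is a hypothetical helper.

```python
def few_shot_prompt(task, examples, new_input):
    """Build a prompt in which demonstrations precede the new request.

    Nothing about the model changes: the examples only live in this
    one prompt, which is what makes the learning 'in context'.
    """
    lines = [task, ""]
    for organization, slogan in examples:
        lines += [f"Organization: {organization}", f"Slogan: {slogan}", ""]
    # The final entry is left open for the model to complete.
    lines += [f"Organization: {new_input}", "Slogan:"]
    return "\n".join(lines)


# Invented demonstrations, used only to show the pattern.
examples = [
    ("City bicycle cooperative", "Pedal together."),
    ("Regional food bank", "No neighbor hungry."),
]
prompt = few_shot_prompt(
    task="Write a short slogan for the organization.",
    examples=examples,
    new_input="University research library",
)
print(prompt)
```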

A body of good practice is developing here, and many guidelines and tools are emerging. Prompt engineering has been identified as a new skill, with learned expertise. It has also been recognized as analogous to coding - one instructs the LLM how to behave with sequences of prompts. (Although, again, bear in mind the remark above about training a dog!)

An important factor here is the size of the context window. The context window is the number of tokens (words or parts of words) that can be input and considered at the same time by the LLM. One advance of GPT-4 was to enlarge the context window, meaning it could handle longer, more complex prompts. More recently, we have seen further major advances here. I note MPT-7B-StoryWriter-65k+ elsewhere, which is optimised for working with stories and can accept 65K tokens, large enough, for example, to process a novel without having to break it up into multiple prompts. The example they give is of inputting the whole of The Great Gatsby and having it write an epilogue. Anthropic has announced that their Claude service now operates with a context window of 100k tokens. One potential benefit of such large context windows is that they allow whole documents to be input for analysis - they give examples of asking questions about a piece of legislation, a research paper, an annual report or financial statement, or maybe an entire codebase.
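A rough sketch shows why window size matters in practice. It assumes about four characters per token, a common rule of thumb for English text rather than an exact figure (real tokenizers differ by model), and it uses a made-up document length.

```python
import math


def estimate_tokens(text, chars_per_token=4.0):
    """Crude token estimate; the 4-characters-per-token ratio is an assumption."""
    return math.ceil(len(text) / chars_per_token)


def split_for_window(text, window_tokens, chars_per_token=4.0):
    """Cut text into pieces that each fit the estimated window size."""
    max_chars = int(window_tokens * chars_per_token)
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


# A stand-in document of 300,000 characters (hypothetical length).
document = "a" * 300_000

tokens = estimate_tokens(document)
small_window_chunks = split_for_window(document, window_tokens=8_000)
large_window_chunks = split_for_window(document, window_tokens=100_000)

print(tokens, len(small_window_chunks), len(large_window_chunks))
```

Under these assumptions the same document must be broken into several prompts for a small window but fits in a single prompt for a 100k-token window.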

An overview of tools, guidelines and other resources produced by dair.ai

Building tools and workflows: agents

Discussion of agents is possibly the most wildly divergent in this space, with topics ranging from controlled business workflow solutions to apocalyptic science fiction scenarios in which autonomous agents do harm.

LLMs have structural limits as assistants. They are based on their training data, which is a snapshot in time. They are unconnected to the world, so they do not search for answers interactively or use external tools. They have functional limits, for example they do not do complex mathematical reasoning. And they typically complete only one task at a time. We are familiar, for example, with ChatGPT telling us that it is not connected to the internet and cannot answer questions about current events.

This has led to growing interest in connecting LLMs to external knowledge resources and tools. However, more than this, there is interest in using the reasoning powers of LLMs to manage more complex, multi-faceted tasks. This has led to the emergence of agents and agent frameworks.

In this context, the LLM is used to support a rules-based framework in which

- programming tools, specialist LLMs, search engines, knowledge bases, and so on, are orchestrated to achieve tasks;

- the natural language abilities of the LLM are leveraged to allow it to plan -- to break down tasks, and to sequence and connect tools to achieve them;

- the LLM may proceed autonomously, using intermediate results to generate new prompts or initiate sub-activities until it completes.
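The three points above can be sketched as a small loop. This is a toy illustration under stated assumptions: `scripted_llm` is a stand-in that returns canned actions instead of calling a real model, the `CALL`/`FINISH` action format is invented, and the only tool is a tiny calculator. It is not how Auto-GPT or any specific framework is implemented.

```python
def calculator(expression):
    """Toy tool: evaluates 'a + b' or 'a * b' for whole numbers only."""
    left, operator, right = expression.split()
    left, right = int(left), int(right)
    return str(left + right if operator == "+" else left * right)


TOOLS = {"calculator": calculator}


def scripted_llm(history):
    """Stand-in for a language model: picks the next action from the history."""
    observations = [h for h in history if h.startswith("Observation: ")]
    if not observations:
        # 'Plan': the task needs arithmetic, so call the calculator tool.
        return "CALL calculator 433 * 1000"
    latest = observations[-1][len("Observation: "):]
    return f"FINISH {latest} kWh"


def run_agent(task, llm, tools, max_steps=5):
    """Rules-based loop: ask the model, run the chosen tool, feed the result back."""
    history = [f"Task: {task}"]
    for _ in range(max_steps):
        action = llm(history)
        if action.startswith("FINISH "):
            return action[len("FINISH "):]
        _, tool_name, tool_input = action.split(" ", 2)
        history.append(f"Observation: {tools[tool_name](tool_input)}")
    return None  # Gave up: step limit reached without a final answer.


answer = run_agent("Convert 433 MWh to kWh.", scripted_llm, TOOLS)
print(answer)
```

The step limit is a deliberate design choice: an agent that proceeds autonomously needs some rule that stops it if it never reaches a final answer.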

Auto-GPT has galvanized attention here. Several other frameworks have also appeared and the term Auto-GPT is sometimes used generically to refer to LLM-based agent frameworks.

LangChain is a general framework and library of components for developing applications, including agents, which use language models. They argue that the most 'powerful and differentiated' LLM-based applications will be data-aware (connecting to external data) and will be agentic (allow an LLM to interact with its environment). LangChain facilitates the plug and play composition of components to build LLM-based applications, abstracting interaction with a range of needed components into LangChain interfaces. Components they have built include language and embedding models, prompt templates, indexes (working with embeddings and vector databases), identified agents (to control interactions between LLMs and tools), and some others.

Frameworks like this are important because they help facilitate the construction of more complex applications. These may be reasonably straightforward (a conversational interface to documentation or PDF documents, comparison shopping app, integration with calculators or knowledge resources) or may involve more complex, iterative interactions.

Hugging Face has also introduced an agents framework, Transformers Agent, which seems similar in concept to LangChain and allows developers to work with an LLM to orchestrate tools on Hugging Face. This is also the space where Fixie hopes to make an impact, using the capabilities of LLMs to allow businesses to build workflows and processes. A marketplace of agents will support this.

This Handbook with parallel videos and code examples is a readable overview of LLM topics

At this stage, publicly visible agents have been relatively simple. The ability to use the language model to orchestrate a conversational interface, tools and knowledge resources is of great interest. We will certainly see more interactions of this kind in controlled business applications, on consumer sites, and elsewhere, especially if they do in fact reduce 'stitching costs.' In a library context, one could see it used in acquisitions, discovery, interlibrary lending - wherever current operations depend on articulating several processes or tools.

Transparency and evaluation

As models are more widely used there is growing interest in transparency, evaluation and documentation, even if there aren't standard agreed practices or nomenclature. From the variety of work here, I mention a couple of initiatives at Hugging Face, given its centrality.

In a paper describing evaluation frameworks at Hugging Face, the authors provide some background on identified issues (reproducibility, centralization, and coverage) and review other work [pdf].

Evaluation is a crucial cornerstone of machine learning – not only can it help us gauge whether and how much progress we are making as a field, it can also help determine which model is most suitable for deployment in a given use case. However, while the progress made in terms of hardware and algorithms might look incredible to a ML practitioner from several decades ago, the way we evaluate models has changed very little. In fact, there is an emerging consensus that in order to meaningfully track progress in our field, we need to address serious issues in the way in which we evaluate ML systems [....].

They introduce Evaluate (a set of tools to facilitate evaluation of models and datasets) and Evaluation on the Hub (a platform that supports large-scale automatic evaluation).

Hugging Face also provide Leaderboards, which show evaluation results. The Open LLM Leaderboard lists openly available LLMs against a particular set of benchmarks.
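The idea behind a leaderboard can be sketched in a few lines: score each model against the same reference answers, then rank. This plain-Python sketch does not use the Hugging Face tools themselves, and the model names, predictions and the choice of accuracy as the metric are invented for illustration.

```python
def accuracy(predictions, references):
    """Share of predictions that exactly match the reference answers."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must be the same length")
    matches = sum(p == r for p, r in zip(predictions, references))
    return matches / len(references)


def leaderboard(model_predictions, references):
    """Score every model on the same benchmark and sort best-first."""
    scores = {
        name: accuracy(predictions, references)
        for name, predictions in model_predictions.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Invented multiple-choice benchmark with four questions.
references = ["A", "C", "B", "D"]
model_predictions = {
    "hypothetical-model-y": ["A", "B", "B", "A"],
    "hypothetical-model-x": ["A", "C", "B", "A"],
}

ranking = leaderboard(model_predictions, references)
print(ranking)
```

Holding the benchmark fixed across models is the point: it addresses, in miniature, the reproducibility concern raised in the paper.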

In related work, Hugging Face have also developed a framework for documentation, and have implemented Model Cards, a standardized approach to description with supporting tools and guidelines. They introduce them as follows:

Model cards are an important documentation framework for understanding, sharing, and improving machine learning models. When done well, a model card can serve as a boundary object, a single artefact that is accessible to people with different backgrounds and goals in understanding models - including developers, students, policymakers, ethicists, and those impacted by machine learning models.

They also provide a review of related and previous work.

Since Model Cards were proposed by Mitchell et al. (2018), inspired by the major documentation framework efforts of Data Statements for Natural Language Processing (Bender & Friedman, 2018) and Datasheets for Datasets (Gebru et al., 2018), the landscape of machine learning documentation has expanded and evolved. A plethora of documentation tools and templates for data, models, and ML systems have been proposed and developed - reflecting the incredible work of hundreds of researchers, impacted community members, advocates, and other stakeholders. //

Some of those working on the topics above were authors on the Stochastic Parrot paper. In that paper, the authors talk about 'documentation debt', where data sets are undocumented and become too large to retrospectively document. Interestingly, they reference work which suggests that archival principles may provide useful lessons in data collection and documentation.

There is clear overlap with the interests of libraries, archives, data repositories and other curatorial groups in this area. In that context, I was also interested to see a group of researchers associated with Argonne National Laboratory show how the FAIR principles can be applied to language models.

Given the black box nature of the models, there are also concerns about how they are used to guide decisions in important life areas open to bias - in law enforcement, education, social services, and so on. There may be very little understanding of how important decisions are being made. This is a regulatory driver, as shown in this Brookings discussion of developments in California and elsewhere.

Generally, the bills introduce new protections when AI or other automated systems are used to help make consequential decisions—whether a worker receives a bonus, a student gets into college, or a senior receives their public benefits. These systems, often working opaquely, are increasingly used in a wide variety of impactful settings. As motivation, Assemblymember Bauer-Kahan’s office cites demonstrated algorithmic harms in healthcare, housing advertising, and hiring, and there have unfortunately been many other such instances of harm. // Brookings

A review of LLM documentation approaches

Hardware

Several technical factors have come together in the current generation of LLMs. The availability of massive amounts of data through webscale gathering and the transformer architecture from Google are central. A third factor is the increased performance of specialist hardware, to deal with the massive memory and compute requirements of LLM processing. Nvidia has been an especially strong player here, and its GPUs are widely used by AI companies and infrastructure providers. However, again, given the belief in massively increased demand, others have also been developing their own custom chips to reduce reliance on Nvidia. The competitive race is on at the hardware level also. See announcements from Microsoft and AMD, for example, or Meta (which is also working on data center design 'that will be “AI-optimized” and “faster and more cost-effective to build”').

There is some speculation about the impact of quantum computing further out, which could deliver major performance improvements.

At the same time, many of the open source newer models are designed to run with much smaller memory and compute requirements, aiming to put them within reach of a broad range of players.

Research interconnections

There are strong interconnections between research universities, startups and the large commercial players in terms of shared research, development and of course the movement of people. Many of the papers describing advances have shared academic and industrial authorship.

This is how The Verge reported it several years ago when neural network pioneers Hinton (who recently left Google), Bengio and LeCun won the Turing Award. They have students and former collaborators throughout the industry.

All three have since taken up prominent places in the AI research ecosystem, straddling academia and industry. Hinton splits his time between Google and the University of Toronto; Bengio is a professor at the University of Montreal and started an AI company called Element AI; while LeCun is Facebook’s chief AI scientist and a professor at NYU. // The Verge

The Stanford AI Index for 2023 notes that industry produced 32 significant machine learning models in 2022, compared to 3 from academia. It cited the increased data and compute resources needed as a factor here. However, one wonders whether there might be some rebalancing in their next report given university involvement in the rise of open source models discussed above.

The leaked Google memo is interesting in this context:

But holding on to a competitive advantage in technology becomes even harder now that cutting edge research in LLMs is affordable. Research institutions all over the world are building on each other’s work, exploring the solution space in a breadth-first way that far outstrips our own capacity. We can try to hold tightly to our secrets while outside innovation dilutes their value, or we can try to learn from each other. // Google "We Have No Moat, And Neither Does OpenAI"

arXiv has become very visible given the common practice of disseminating technical accounts there. While this may give them an academic patina, not all then go through submission/refereeing/publication processes.

This role is recognised on the Hugging Face Hub. If a dataset card includes a link to a paper on arXiv, the hub will convert the arXiv ID into an actionable link to the paper and it can also find other models on the Hub that cite the same paper.
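A minimal sketch of this kind of linking follows. It assumes papers are cited in card text as `arxiv:<id>` and it is not the Hub's actual implementation; the model names and arXiv IDs are made up for illustration and are not meant to point at specific papers.

```python
import re
from collections import defaultdict

# New-style arXiv identifiers look like YYMM.NNNNN, optionally followed by a version.
ARXIV_ID = re.compile(r"arxiv:(\d{4}\.\d{4,5})(?:v\d+)?", re.IGNORECASE)


def arxiv_links(card_text):
    """Turn each cited arXiv ID into a link to the paper's abstract page."""
    return [f"https://arxiv.org/abs/{arxiv_id}" for arxiv_id in ARXIV_ID.findall(card_text)]


def models_by_paper(cards):
    """Index model names by the arXiv IDs their cards cite."""
    index = defaultdict(list)
    for model_name, text in cards.items():
        for arxiv_id in set(ARXIV_ID.findall(text)):
            index[arxiv_id].append(model_name)
    return dict(index)


# Hypothetical cards with illustrative IDs.
cards = {
    "org/model-a": "Trained as described in arxiv:2301.00001.",
    "org/model-b": "See arxiv:2301.00001v2 and arxiv:2302.00002.",
}

links = arxiv_links(cards["org/model-b"])
index = models_by_paper(cards)
print(links)
print(index["2301.00001"])
```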

Hugging Face also has a paper notification service.

3 Social concerns

Calling for government regulation and referencing much-reported recent arguments for a pause in AI development, a Guardian editorial summarises:

More importantly, focusing on apocalyptic scenarios – AI refusing to shut down when instructed, or even posing humans an existential threat – overlooks the pressing ethical challenges that are already evident, as critics of the letter have pointed out. Fake articles circulating on the web or citations of non-existent articles are the tip of the misinformation iceberg. AI’s incorrect claims may end up in court. Faulty, harmful, invisible and unaccountable decision-making is likely to entrench discrimination and inequality. Creative workers may lose their living thanks to technology that has scraped their past work without acknowledgment or repayment. // The Guardian view on regulating AI: it won’t wait, so governments can’t

Wrong or harmful results

We know that LLMs 'hallucinate' plausible-sounding outputs which are factually incorrect or fictitious. In addition, they can reflect biased or harmful views learned from the training data. They may normalize dominant patterns in their training data (doctors are men and nurses are women, for example).

In a discussion about their Imagen service, Google is very positive about the technical achievements and overall performance of the system ("unprecedented photorealism"). However, they are not releasing it because of concerns about social harm. I provide an extended quote here for two reasons. First, it is a direct account of the issues with training data. And second, this is actually Google talking about these issues.

Second, the data requirements of text-to-image models have led researchers to rely heavily on large, mostly uncurated, web-scraped datasets. While this approach has enabled rapid algorithmic advances in recent years, datasets of this nature often reflect social stereotypes, oppressive viewpoints, and derogatory, or otherwise harmful, associations to marginalized identity groups. While a subset of our training data was filtered to removed noise and undesirable content, such as pornographic imagery and toxic language, we also utilized LAION-400M dataset which is known to contain a wide range of inappropriate content including pornographic imagery, racist slurs, and harmful social stereotypes. Imagen relies on text encoders trained on uncurated web-scale data, and thus inherits the social biases and limitations of large language models. As such, there is a risk that Imagen has encoded harmful stereotypes and representations, which guides our decision to not release Imagen for public use without further safeguards in place.

Preliminary assessment also suggests Imagen encodes several social biases and stereotypes, including an overall bias towards generating images of people with lighter skin tones and a tendency for images portraying different professions to align with Western gender stereotypes. Finally, even when we focus generations away from people, our preliminary analysis indicates Imagen encodes a range of social and cultural biases when generating images of activities, events, and objects. // Google

The discussion above is about images. Of course, the same issues arise with text. This was one of the principal strands in the Stochastic Parrot paper mentioned above, which was the subject of contention with Google:

In summary, LMs trained on large, uncurated, static datasets from the Web encode hegemonic views that are harmful to marginalized populations. We thus emphasize the need to invest significant resources into curating and documenting LM training data.

The authors note for example the majority male participation in Reddit or contribution to Wikipedia.

This point is also made in a brief review of bias on the Jisc National Centre for AI site, which notes the young, male and American character of Reddit. They look at research studies on GPT-3 outputs which variously show gender stereotypes, increased toxic text when a disability is mentioned, and anti-Muslim bias. They discuss bias in training data, and also note that it may be introduced at later stages - with RLHF, for example, and in training the Moderation API in OpenAI.

One disturbing finding of the BIG-bench benchmarking activity noted above was that "model performance on social bias metrics often grows worse with increasing scale." They note that this can be mitigated by prompting, and also suggest that the approach of the LaMDA model "to improve model safety and reduce harmful biases will become a crucial component in future language models."

Using Hugging Face tools to explore bias in language models

Authenticity, creation and intellectual property

LLMs raise fundamental questions about expertise and trust, the nature of creation, authenticity, rights, and the reuse of training and other input materials. These all entail major social, ethical and legal issues. They also raise concerns about manipulation, deliberately deceptive fake or false outputs, and bad actors. These are issues about social confidence and trust, and the broad erosion of this trust and confidence worries many.

There will be major policy and practice discussions in coming years. Think of the complexity of issues in education, the law, or medicine, for example, seeking to balance benefit and caution.

Full discussion is beyond my scope and competence, but here are some examples which illustrate some of these broad issues:

- Scientific journals have had to clarify policies about submissions. For example, Springer Nature added two policies to their guidelines. No LLM will be accepted as an author on the basis that they cannot be held accountable for the work they produce. And second, any use of LLM approaches needs to be documented in submitted papers. They argue that such steps are essential to the transparency scientific work demands.

- What is the intellectual and cultural record? For the first time, we will see the wholesale creation of images, videos and text by machines. This raises questions about what should be collected and maintained.

- Reddit and Stack Overflow have stated that they want compensation for being used as training data for Large Language Models. The potential value of such repositories of questions and answers is clear; what is now less clear is how much, if at all, LLM creators will be willing to pay for important sources. (It is interesting to note arXiv and PLOS on some lists of training data inputs.) Of course, given the broad harvest of web materials in training data there is much that owners/publishers may argue is inappropriate use. Others may also join Reddit in this way, and some may take legal steps to seek to prevent use of their data.

- There is growing interest in apps and tools which can tell what creations are synthesised by AI. Indeed, OpenAI itself has produced one: "This classifier is available as a free tool to spark discussions on AI literacy." They note, however, that it is not always accurate and may easily be tricked. Given concerns in education, it is not surprising to see plagiarism detection company Turnitin provide support here as well.

- LLMs are potentially powerful scholarly tools in cultural analytics and related areas, prospecting recorded knowledge for meaningful patterns. However, such work will be influenced -- in unknown ways -- by the composition of training or instruction data. As noted above, a recent study showed that GPT-4's knowledge of books naturally reflects what is popular on the web, which turns out to include popular books out of copyright, science fiction/fantasy, and some other categories.

- The LLM has at its core questions about what it is reasonable to 'generate' from other materials. This raises legal and ethical questions about credit, intellectual property, fair use, and about the creative process itself. A recent case involving AI-generated material purporting to be Drake was widely noted. What is the status of AI-generated music in response to the prompt: 'play me music in the style of Big Thief'? (Looking back to Napster and other industry trends, Rick Beato, music producer and YouTuber, argues that labels and distributors will find ways to work with AI-generated music for economic reasons.) A group of creators have published an open letter expressing concern about the generation of art work: "Generative AI art is vampirical, feasting on past generations of artwork even as it sucks the lifeblood from living artists." The Getty case against Stability AI is symptomatic:

Getty Images claims Stability AI ‘unlawfully’ scraped millions of images from its site. It’s a significant escalation in the developing legal battles between generative AI firms and content creators. // The Verge

Questions about copyright are connected with many of these issues. Pam Samuelson summarises issues from a US perspective in the presentation linked to below. From the description:

The urgent questions today focus on whether ingesting in-copyright works as training data is copyright infringement and whether the outputs of AI programs are infringing derivative works of the ingested images. Four recent lawsuits, one involving GitHub’s Copilot and three involving Stable Diffusion, will address these issues.

She suggests that it will take several years for some of these questions to be resolved through the courts. In her opinion, ingesting in-copyright works for the purposes of training will be seen as fair use. She also covers recent cases in which the US Copyright Office ruled that synthesized art works were not covered by copyright, as copyright protection requires some human authorship. She describes the recent case of Zarya of the Dawn, a graphic work where the text was humanly authored but the images were created using Midjourney. The Art Newspaper reports: "The Copyright Office granted copyright to the book as a whole but not to the individual images in the book, claiming that these images were not sufficiently produced by the artist."

Pamela Samuelson, Richard M. Sherman Distinguished Professor of Law, UC Berkeley, discusses several of the recent suits around content and generative AI.

The US Copyright Office has launched a micro-site to record developments as it explores issues around AI and copyright. And this is in one country. Copyright and broader regulatory contexts will vary by jurisdiction which will add complexity.

Relaxed ethical and safety frameworks

There was one very topical concern in the general warnings that Geoff Hinton sounded: that the release of ChatGPT, and Microsoft's involvement, had resulted in increased urgency within Google which might cause a less responsible approach. This echoes a general concern that the desire to gain first mover advantage – or not to be left behind – is causing Microsoft, Google and others to dangerously relax guidelines in the race to release products. There have been some high profile arguments within each company, and The New York Times recently documented internal and external concerns.

The surprising success of ChatGPT has led to a willingness at Microsoft and Google to take greater risks with their ethical guidelines set up over the years to ensure their technology does not cause societal problems, according to 15 current and former employees and internal documents from the companies. // NYT

The article notes how Google "pushed out" Timnit Gebru and Margaret Mitchell over the Stochastic Parrots paper, and discusses the subsequent treatment of ethics and oversight activities at both Google and Microsoft as they rushed to release products.

An interview with Timnit Gebru

Concentration effects: the rich get richer

We can see a dynamic familiar from the web emerging. There is a natural concentration around foundation models and the large infrastructure required to build and manage them. Think of how Amazon, Google and Facebook emerged as dominant players in the web. Current leaders in LLM infrastructure want to repeat that dominance. At the same time, there is an explosion of work around tools, apps, plugins, and smaller LLMs, aiming to diffuse capacity throughout the environment.

Despite this broad activity, there is a concern that LLM infrastructure will be concentrated in a few hands, which gives large players economic advantage, and little incentive to explain the internal workings of models, the data used, and so on. There are also concerns about the way in which this concentration may give a small number of players influence over the way in which we see and understand ideas, issues and identities, given the potential role of generative AI in communications, the media, and content creation.

Environmental impact of large scale computing

LLMs consume large amounts of compute power, especially during training. They contribute to the general concern about the environmental impact of large scale computing, which was highlighted during peak Blockchain discussions.

Big models emit big carbon emissions numbers – through large numbers of parameters in the models, power usage effectiveness of data centers, and even grid efficiency. The heaviest carbon emitter by far was GPT-3, but even the relatively more efficient BLOOM took 433 MWh of power to train, which would be enough to power the average American home for 41 years. // HAI, 2023 State of AI in 14 Charts
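As a quick check on the scale in that comparison, the two figures quoted imply a household consumption of a little over 10 MWh a year. The calculation below uses only the numbers in the quote; the per-home figure is derived from them, not independently sourced.

```python
# Figures taken from the quote above.
training_energy_mwh = 433   # energy reported to train BLOOM
home_years = 41             # years of supply for an average American home

# Implied annual consumption of one home, derived from the two figures.
implied_mwh_per_home_year = training_energy_mwh / home_years
implied_kwh_per_home_year = implied_mwh_per_home_year * 1000

print(round(implied_mwh_per_home_year, 2), "MWh per home per year")
print(round(implied_kwh_per_home_year), "kWh per home per year")
```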

Sasha Luccioni, a researcher with the interesting title of 'climate lead' at Hugging Face, discusses these environmental impacts in a general account of LLM social issues.

Hidden labor and exploitation

Luccioni also notes the potentially harmful effects of participation in the RLHF process discussed above, as workers have to read and flag large volumes of harmful materials deemed not suitable for reuse. The Jisc post below discusses this hidden labor in a little more detail, as large companies, and their contractors, hire people to do data labelling, transcription, object identification in images, and other tasks. They note that this work is poorly recompensed and that the workers have few rights. They point to this description of work being carried out in refugee camps in Kenya and elsewhere, in poor conditions, and in heavily surveilled settings.

Timnit Gebru and colleagues from the DAIR Institute provide some historical context for the emergence of hidden labor in the development of AI, discuss more examples of this hidden labor, and strongly argue "that supporting transnational worker organizing efforts should be a priority in discussions pertaining to AI ethics."

Regulating

There has been a rush of regulatory interest, in part given the explosion of media interest and speculation. Italy temporarily banned ChatGPT pending clarification about compliance with EU regulations. The ban is now lifted but other countries are also investigating. The White House has announced various steps towards regulation, for example, and there is a UK regulatory investigation.

Italy was the first country to make a move. On March 31st, it highlighted four ways it believed OpenAI was breaking GDPR: allowing ChatGPT to provide inaccurate or misleading information, failing to notify users of its data collection practices, failing to meet any of the six possible legal justifications for processing personal data, and failing to adequately prevent children under 13 years old using the service. It ordered OpenAI to immediately stop using personal information collected from Italian citizens in its training data for ChatGPT. // The Verge

Lina Khan, the chair of the US Federal Trade Commission, has compared the emergence of AI with that of Web 2.0. She notes some of the bad outcomes there, including concentration of power and invasive tracking mechanisms. And she warns about potential issues with AI: firms engaging in unfair competition or collusion, 'turbocharged' fraud, automated discrimination.

The trajectory of the Web 2.0 era was not inevitable — it was instead shaped by a broad range of policy choices. And we now face another moment of choice. As the use of A.I. becomes more widespread, public officials have a responsibility to ensure this hard-learned history doesn’t repeat itself. // New York Times

Chinese authorities are proposing strong regulation, which goes beyond current capabilities, according to one analysis:

It mandates that models must be “accurate and true,” adhere to a particular worldview, and avoid discriminating by race, faith, and gender. The document also introduces specific constraints about the way these models are built. Addressing these requirements involves tackling open problems in AI like hallucination, alignment, and bias, for which robust solutions do not currently exist. // Freedom to Tinker, Princeton's Center for Information Technology Policy

This chilling comment by the authors underlines the issues around 'alignment':

One could imagine a future where different countries implement generative models trained on customized corpora that encode drastically different worldviews and value systems.

Tim O'Reilly argues against premature regulation

While there is general agreement that regulation is desirable, much depends on what it looks like. Regulation is more of a question at this stage than an answer; it has to be designed. One would not want to design regulations in a way that favors the large incumbents, for example, by making it difficult for smaller players to comply or get started.

This point is made by Yannic Kilcher (of Open Assistant) in a video commentary on US Senate hearings on AI oversight. He goes on to argue that openness is the best approach here, so that people can see how the model is working and what data has been used to train it. Stability AI also argue for openness in their submission to the hearings: "Open models and open datasets will help to improve safety through transparency, foster competition, and ensure the United States retains strategic leadership in critical AI capabilities."

Finally, I was interested to read about AI-free spaces or sanctuaries.

To implement AI-free sanctuaries, regulations allowing us to preserve our cognitive and mental harm should be enforced. A starting point would consist in enforcing a new generation of rights – “neurorights” – that would protect our cognitive liberty amid the rapid progress of neurotechnologies. Roberto Andorno and Marcello Ienca hold that the right to mental integrity – already protected by the European Court of Human Rights – should go beyond the cases of mental illness and address unauthorised intrusions, including by AI systems. // Antonio Pele, It’s time for us to talk about creating AI-free spaces, The Conversation

The impact on employment

One of the issues in the Hollywood screenwriters' strike is reaching agreement over uses of AI, which could potentially be used to accelerate script development or in other areas of work. However, according to The Information: 'While Hollywood Writers Fret About AI, Visual Effects Workers Welcome It.' And at the same time, studios are looking at AI across the range of what they do - looking at data about what to make, who to cast, how to distribute; more seamlessly aging actors, dubbing or translating; and so on.

I thought the example interesting as it shows impact in a highly creative field. More generally the impact on work will again be both productive and problematic. The impact on an area like libraries, for example, will be multi-faceted and variable. This is especially so given the deeply relational nature of the work, closely engaged with research and education, publishing, the creative industries and a variety of technology providers.

While it is interesting to consider reports like this one from Goldman Sachs or this research from Ed Felten and colleagues which look at the exposure of particular occupations to AI, it is not entirely clear what to make of them. One finding from the latter report is "that highly-educated, highly-paid, white-collar occupations may be most exposed to generative AI."

Certainly, predictions like the one in the Goldman Sachs report that up to a quarter of jobs might be displaced by AI are driving regulator interest, which is also fueled by headline-grabbing statements from high-profile CEOs (of British Telecom and IBM, for example) forecasting reduced staff as AI moves into back-office and other areas. There will be increased advocacy and action from industry groups, unions, and others.

There are very real human consequences in terms of uncertainty about futures, changing job requirements, the need to learn new skills or to cope with additional demands, or to face job loss. This follows on several stressful years. The cumulative effect of these pressures can be draining, and empathy can be difficult. However, empathy, education and appropriate transparency will be critical in the workplace. This is certainly important in libraries given the exposure to AI in different ways across the range of services.

Major unintended event

LLMs will manage more processes; judgements will be based on chatbot responses; code written by LLMs may be running in critical areas; agents may initiate unwelcome processes. Given gaps in knowledge and susceptibility to error, concerns have been expressed about over-reliance on LLM outputs and activities.

One response is at the regulatory level, but individual organizations will also have to manage risk in new ways, and put in place procedures and processes to mitigate potential missteps. This has led to yet another word becoming more popular: 'guardrails.' Guardrails are programmable rules or constraints which guide the behavior of an LLM-based application to reduce undesired outcomes. However, it is not possible to anticipate all the ways in which such precautions would be needed.
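
To make the idea of guardrails as 'programmable rules' a little more concrete, here is a minimal sketch in Python. It does not represent any particular guardrails product: the rule names, the blocked phrase, the length limit and the fallback message are all hypothetical, chosen only to illustrate checking model output against a set of rules before it reaches the user.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Rule:
    name: str
    violates: Callable[[str], bool]  # returns True when the text breaks the rule

# Hypothetical pattern for spotting email addresses in model output.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Illustrative rules only; a real deployment would need far more than this.
RULES: List[Rule] = [
    Rule("no_email_addresses", lambda t: bool(EMAIL.search(t))),
    Rule("max_length", lambda t: len(t) > 500),
    Rule("blocked_topic", lambda t: "chemical weapon" in t.lower()),
]

def apply_guardrails(text: str, rules: List[Rule] = RULES) -> List[str]:
    """Return the names of every rule the text violates."""
    return [rule.name for rule in rules if rule.violates(text)]

def guarded_reply(model_output: str, fallback: str = "I can't help with that.") -> str:
    """Pass the model output through unchanged, or replace it if any rule is violated."""
    return fallback if apply_guardrails(model_output) else model_output
```

Even this toy version shows the limitation noted above: a rule only catches what somebody thought to write down in advance.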

Beyond this, there are broader concerns about the malicious use of AI where serious harm may be caused, in crime or war settings, for example. So-called 'dual use' applications are a concern, where an LLM may be used for both productive and malicious purposes. See for example this discussion of an agent-based architecture "capable of autonomously designing, planning, and executing complex scientific experiments" [pdf]. While the authors claim some impressive results, they caution against harmful use of such approaches, and examine the potential use of molecular machine learning models to produce illicit drugs or chemical weapons. The authors call for the AI companies to work with the scientific community to address these dual use concerns.

Concerns are also voiced by those working within generative AI. As mentioned above, Geoffrey Hinton, a pioneer of neural networks and generative AI, stepped down from his role at Google so that he might comment more freely about what he saw as threats caused by increased commercial competition and potential bad actors.

Hinton's long-term worry is that future AI systems could threaten humanity as they learn unexpected behavior from vast amounts of data. "The idea that this stuff could actually get smarter than people—a few people believed that," he told the Times. "But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that." // Ars Technica

This received a lot of public attention, and I mentioned some of these concerns in relation to 'alignment' above. Anthropic is the creator of the Claude model and is seen as a major competitor to Google and OpenAI. This is from the Anthropic website:

So far, no one knows how to train very powerful AI systems to be robustly helpful, honest, and harmless. Furthermore, rapid AI progress will be disruptive to society and may trigger competitive races that could lead corporations or nations to deploy untrustworthy AI systems. The results of this could be catastrophic, either because AI systems strategically pursue dangerous goals, or because these systems make more innocent mistakes in high-stakes situations. // Anthropic

Conclusion

As I write, announcements come out by the minute, of new models, new applications, new concerns.

Four capacities seem central (as I think about future library applications):

- Language models. There will be an interconnected mix of models, providers and business models. Large foundation models from the big providers will live alongside locally deployable open source models from commercial and research organizations alongside models specialized for particular domains or applications. The transparency, curation and ownership of models (and tuning data) are now live issues in the context of research use, alignment with expectations, overall bias, and responsible civic behavior.

- Conversational interfaces. We will get used to conversational interfaces (or maybe 'ChUI' - the Chat User Interface). This may result in a partial shift from 'looking for things' to 'asking questions about what one wants to know' in some cases. In turn this means that organizations may begin to document what they do more fully on their websites, given the textual abilities of discovery, assistant, and other applications.

- Agents and workflows. The text interfacing capacities of LLMs will be used more widely to create workflows, interaction with tools and knowledge bases, and so on. LLMs will support orchestration frameworks for tools, data and agents to build workflows and applications that get jobs done.

- Embeddings and Vector databases. Vector databases are important for managing embeddings which will drive new discovery and related services. Embedding APIs are available from several organizations.
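
As a rough illustration of the last of these capacities, the sketch below shows the core operation a vector database performs: ranking stored embeddings by their similarity to a query embedding. It is a toy under stated assumptions. The three-dimensional vectors and the document titles are invented for the example; real embeddings come from an embedding model or API and have hundreds or thousands of dimensions, and a real vector database uses indexing rather than a full scan.

```python
import math
from typing import Dict, List

def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up 3-dimensional "embeddings" keyed by hypothetical document titles.
INDEX: Dict[str, List[float]] = {
    "library opening hours": [0.9, 0.1, 0.0],
    "interlibrary loan policy": [0.1, 0.9, 0.1],
    "research data management": [0.0, 0.2, 0.9],
}

def nearest(query_vec: List[float], index: Dict[str, List[float]] = INDEX, k: int = 1) -> List[str]:
    """Return the k titles whose embeddings are most similar to the query vector."""
    ranked = sorted(
        index,
        key=lambda title: cosine_similarity(query_vec, index[title]),
        reverse=True,
    )
    return ranked[:k]
```

Calling `nearest([0.8, 0.2, 0.0])` returns the title whose vector points in the most similar direction to the query, which is the basis for discovery services that match on meaning instead of exact keywords.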

Several areas stand out where there is a strong intersection between social policy, education and advocacy:

- Social confidence and trust: alignment. Major work needs to be done on alignment at all levels, to mitigate hallucination, to guard against harmful behaviors, to reduce bias, and also on difficult issues of understanding and directing LLM behaviors. However, there will be ongoing concern that powerful capacities are available to criminal or political bad actors, and that social trust and confidence may be eroded by the inability to distinguish between human and synthesized communications.

- Transparency. We don't know what training data, tuning data and other sources are used by the foundation models in general use. This makes them unsuitable for some types of research use; it also raises more general questions about behaviors and response. We also know that some services capture user prompts for tuning. Tolerances may vary here, as the history of search and social apps has shown, but it can be important to know what one is using. Models with more purposefully curated training and tuning data may become more common. Documentation, evaluation and interpretability are important elements of productive use, and there are interesting intersections here with archival and library practice and skills.

- Regulation. Self-regulation and appropriate government activities are both important. The example of social media is not a good one, either from the point of view of vendor positioning or the ability to regulate. But such is the public debate that regulation seems inevitable. Hopefully, it will take account of lessons learned from the Web 2.0 era, but also proceed carefully as issues become understood.

- Hidden labor and exploitation. We should be aware of the hidden labor involved in building services, and do what we can as users and as buyers to raise awareness, and to make choices.

Generative AI is being deployed and adopted at scale. It will be routine and surprising, productive and problematical, unobtrusive and spectacular, welcome and unwelcome.

I was very struck by the OpenAI comment that training the large language model is more like training a dog than writing software. An obvious rejoinder is that we hope it does not bite the hand that feeds it. However, it seems better to resist the comparison and hope that the large language model evolves into a responsibly managed machine which is used in productive ways.