Well, another very fine issue of the Code4Lib Journal has appeared.

Jody L DeRidder has an interesting piece describing how they used browsable link pages (by subject, name, ...) and sitemaps to improve the visibility of a particular resource to search engines. The discussion gets into some of the issues of trying to get crawled, indexed, and then ranked: the search engines may apply decision criteria at each of these steps. Tony Boston, then with the National Library of Australia, published an article a while ago on the library's experiences in exposing materials to search engines; it includes some pointers based on lessons learned. I hope that we see more of these types of articles, as SSEO (social/search engine optimization) is a topic of growing importance for libraries. Here is a sentence from Jody’s conclusion:

Within three months of completion of this project, over 4 times as many hits and over 5 times as many users were recorded in a month as had ever been previously measured. [The Code4Lib Journal – Googlizing a Digital Library]
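For readers who have not met sitemaps before, here is a minimal sketch of the idea in Python. It is not the code used in the project described above; the `build_sitemap` function and the example.org URLs are made up for illustration. It simply lists page URLs in the XML format defined by the sitemaps.org protocol, which crawlers can read to discover pages they might not reach by following links.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML string listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical item pages in a digital library (assumed URLs).
pages = [
    "https://example.org/items/1",
    "https://example.org/items/2",
]

print(build_sitemap(pages))
```

A real sitemap would usually be written to a file at the site root and might carry optional elements such as `lastmod`; those are left out here to keep the sketch short.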

Update: See also the following article in the current issue of D-Lib Magazine. Clearly articles on digital library search engine optimization are like buses. None comes for ages, and then several come together.

Site Design Impact on Robots: An Examination of Search Engine Crawler Behavior at Deep and Wide Websites

Joan A. Smith and Michael L. Nelson, Old Dominion University

doi:10.1045/march2008-smith

[D-Lib Magazine (March/April 2008)]

And I should mention that my colleagues also have an article on metadata crosswalking in this issue of the Code4Lib Journal:

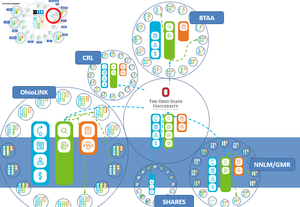

This paper discusses an approach and set of tools for translating bibliographic metadata from one format to another. A computational model is proposed to formalize the notion of a ‘crosswalk’. The translation process separates semantics from syntax, and specifies a crosswalk as machine executable translation files which are focused on assertions of element equivalence and are closely associated with the underlying intellectual analysis of metadata translation. A data model developed by the authors called Morfrom serves as an internal generic metadata format. Translation logic is written in an XML scripting language designed by the authors called the Semantic Equivalence Expression Language (Seel). These techniques have been built into an OCLC software toolkit to manage large and diverse collections of metadata records, called the Crosswalk Web Service. [The Code4Lib Journal]
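To make the notion of a crosswalk as a set of element equivalence assertions a little more concrete, here is a toy sketch in Python. It is emphatically not Seel, Morfrom, or the Crosswalk Web Service: the element names, the `EQUIVALENCES` table, and the `crosswalk` function are all invented for illustration. It assumes a record has already been parsed out of its native syntax into a plain dictionary of element names and values, so that the translation step only has to deal with which elements are treated as equivalent.

```python
# Hypothetical equivalence assertions: source element -> target element.
# These names are illustrative and do not come from any real schema.
EQUIVALENCES = {
    "title_statement": "title",
    "main_entry_personal_name": "creator",
    "subject_topical_term": "subject",
}


def crosswalk(record, equivalences):
    """Translate a record (dict of element name -> list of values).

    Elements with no asserted equivalent are dropped from the output.
    """
    translated = {}
    for element, values in record.items():
        target = equivalences.get(element)
        if target is None:
            continue  # no equivalence asserted for this element
        translated.setdefault(target, []).extend(values)
    return translated


# A made-up source record, already parsed from its native syntax.
source_record = {
    "title_statement": ["Googlizing a Digital Library"],
    "main_entry_personal_name": ["DeRidder, Jody L"],
    "local_note": ["not mapped"],
}

print(crosswalk(source_record, EQUIVALENCES))
```

Keeping the equivalence table separate from the parsing and serializing code is the point of the sketch: the table can be reviewed and changed by someone thinking about metadata semantics without touching anything that deals with record syntax.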